EchoTune

AI-powered smart alarm for personalized time management

Overview

EchoTune is a smart alarm device designed to help users manage time and wake up more intentionally without relying on their phone. Instead of playing a fixed alarm sound, the device interprets spoken tasks, infers how urgent they are, and responds with personalized music and lighting.

The project explores how LLMs can be embedded into everyday devices to translate natural language into structured decisions and real-world actions.

Role: Designer & Developer

Context: Design Technology Project at Cornell Tech

Focus: AI system design, LLM integration, IoT interaction, human-centered UX

Problem

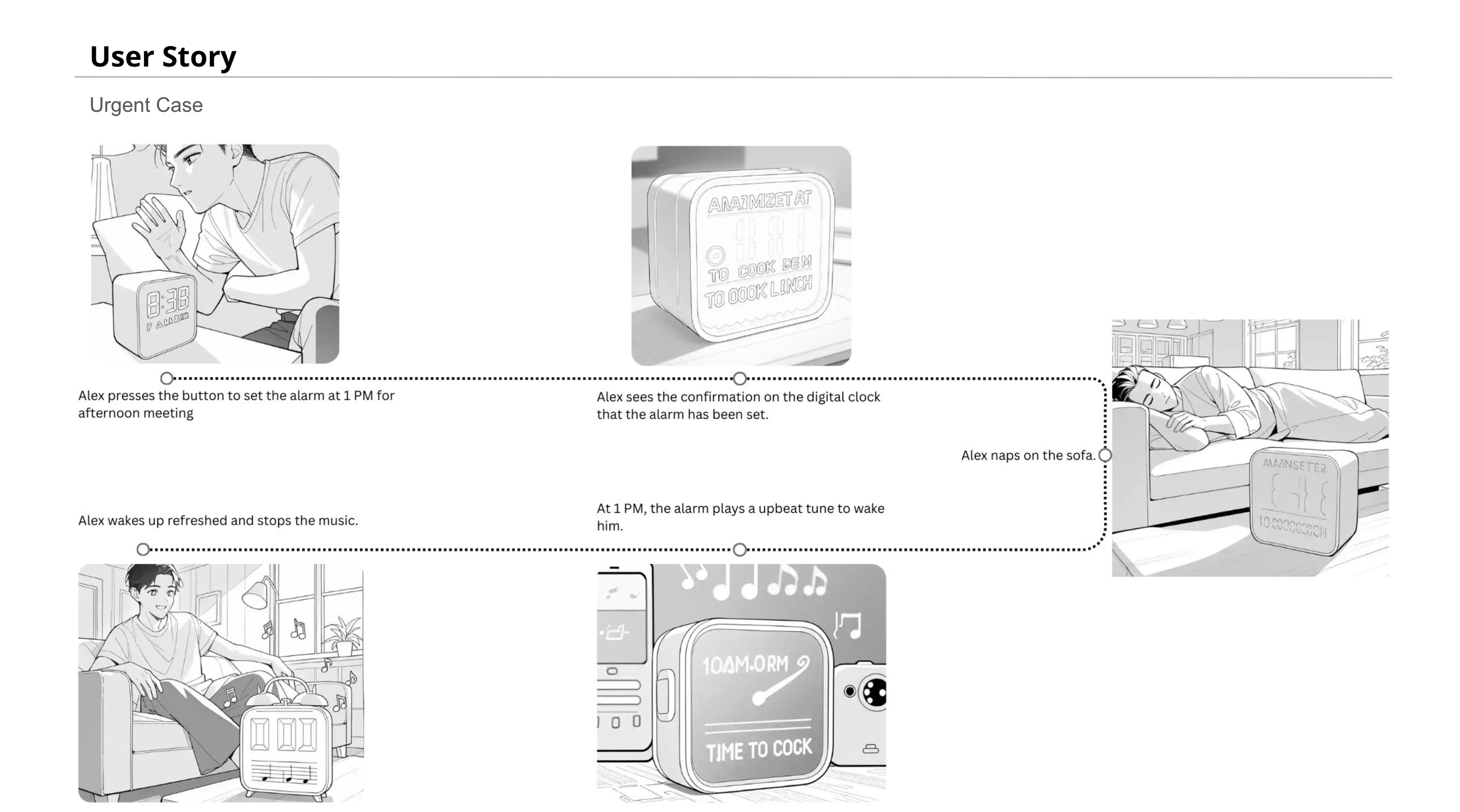

People don't wake up for the same reason every day. Some mornings are routine, while others are tied to important or time-sensitive activities. However, most alarm systems treat all wake-ups the same.

Conventional alarms rely on fixed sounds or schedules and don't account for what the user is waking up for. As a result, wake-ups often feel too abrupt on an ordinary morning or not motivating enough before an important event.

Solution

EchoTune acts as a lightweight AI assistant embedded in a physical device.

Users press a button and speak an event (e.g., "I have an interview at 5 PM"). The system interprets the input, determines urgency, and adjusts music and lighting accordingly.

Core behavior:

- Spoken input is transcribed using Google Speech Recognition

- A large language model (GPT-4) extracts the activity, standardizes the time, and classifies urgency

- Urgent tasks trigger fast-paced music with blinking lights

- Non-urgent tasks trigger calming music with steady lighting

- Music and light fade in and out gradually for comfort
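A gradual fade comes down to stepping a level from one value to another over time. The sketch below is a minimal illustration, not the project's code. The helper names `fade_steps` and `run_fade` are hypothetical. The `set_level` callback is an assumed hook that could wrap, for example, a spotipy client's `volume()` call or a brightness command sent to the Arduino.

```python
import time
from typing import Callable, List


def fade_steps(start: int, end: int, steps: int) -> List[int]:
    """Return `steps` evenly spaced integer levels from start to end, inclusive."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    span = end - start
    return [round(start + span * i / (steps - 1)) for i in range(steps)]


def run_fade(set_level: Callable[[int], None], levels: List[int], interval_s: float) -> None:
    """Apply each level in order, pausing between steps so the change is gradual."""
    for level in levels:
        set_level(level)
        time.sleep(interval_s)
```

A fade-in to 80% volume could be `fade_steps(0, 80, 9)`. The matching fade-out reverses the endpoints: `fade_steps(80, 0, 9)`.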

Additional features such as lamp mode and an adjustable structure support everyday use beyond alarms.

AI System Design

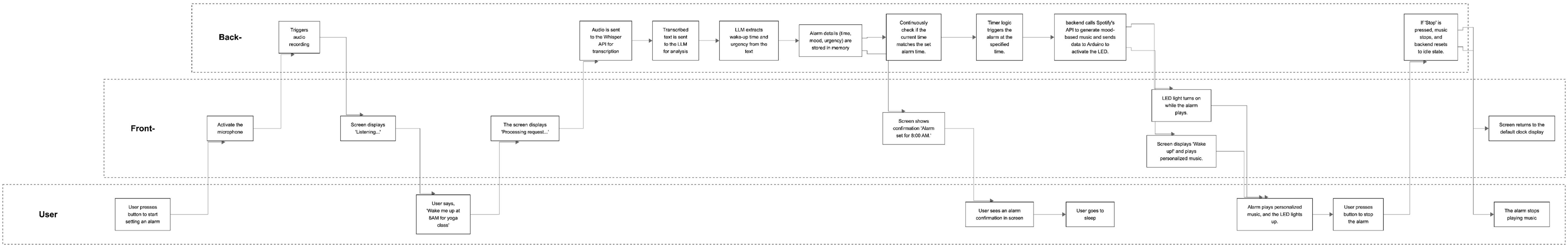

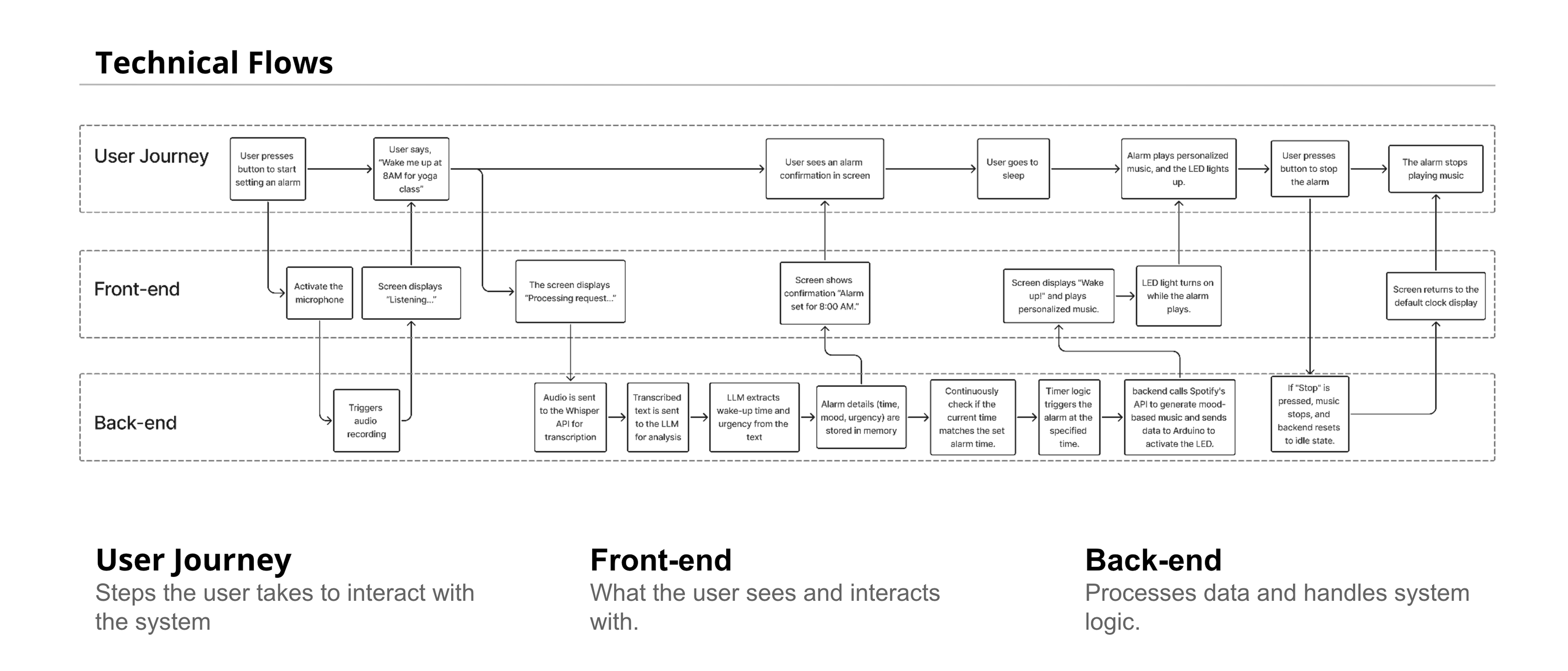

Rather than treating AI as a black box, the system was designed as a clear decision pipeline that converts natural language into deterministic actions.

Speech-to-text — Spoken input is converted into text using Google Speech Recognition.

LLM-based intent understanding — GPT-4 extracts the main activity, normalizes time expressions (e.g., converting "six thirty in the morning" into a standard clock time), and reformats tasks into a consistent structure.
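Because the model's reply ends up driving hardware, it helps to validate it before acting on it. The following is a sketch under stated assumptions. The prompt text and the `{"activity": ..., "time": "HH:MM"}` reply shape are hypothetical, since the project's actual prompt and output format are not published. The code only parses and checks a reply string; it does not call the OpenAI API.

```python
import json
import re
from dataclasses import dataclass

# Hypothetical instruction sent alongside the transcript; the real prompt is not published.
SYSTEM_PROMPT = (
    "Extract the main activity and its time from the user's sentence. "
    'Reply with JSON only: {"activity": "<short noun phrase>", "time": "HH:MM"} '
    "using a 24-hour clock."
)

# Accepts 00:00 through 23:59.
TIME_RE = re.compile(r"^([01]\d|2[0-3]):[0-5]\d$")


@dataclass(frozen=True)
class ParsedTask:
    activity: str
    time: str  # normalized 24-hour "HH:MM"


def parse_llm_reply(reply: str) -> ParsedTask:
    """Validate the model's JSON reply before it is allowed to drive hardware."""
    data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    activity = str(data.get("activity", "")).strip().lower()
    time_str = str(data.get("time", "")).strip()
    if not activity:
        raise ValueError("missing activity")
    if not TIME_RE.match(time_str):
        raise ValueError(f"time is not in HH:MM form: {time_str!r}")
    return ParsedTask(activity=activity, time=time_str)
```

For "I have an interview at 5 PM", a well-formed reply under this assumed schema would be `{"activity": "interview", "time": "17:00"}`. A reply such as `"6:30 AM"` is rejected rather than guessed at.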

Urgency classification — The extracted activity is classified as urgent or non-urgent using keyword-based logic informed by the LLM output. This keeps behavior explainable and predictable.
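A keyword rule of this kind can be very small, which is what keeps it explainable. The sketch below assumes it runs on the short activity phrase the LLM has already extracted. The keyword set is purely illustrative, because the project's actual list is not given.

```python
import re

# Illustrative keyword set; the project's actual list is not published.
URGENT_KEYWORDS = frozenset(
    {"interview", "exam", "flight", "deadline", "meeting", "appointment", "presentation"}
)


def classify_urgency(activity: str) -> str:
    """Label an LLM-extracted activity as 'urgent' or 'non-urgent' by keyword match."""
    words = set(re.findall(r"[a-z]+", activity.lower()))
    return "urgent" if words & URGENT_KEYWORDS else "non-urgent"
```

The LLM has already reduced free-form speech to a short phrase, so a set lookup is enough. Each "urgent" label can then be traced back to the specific keyword that triggered it.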

Action orchestration — Based on urgency:

- Spotify API plays either energetic or soothing music

- LEDs display blinking or steady light patterns

- Arduino controls hardware behavior in real time

The AI output directly drives the device's behavior rather than only generating text.
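The orchestration step can be pictured as a fixed lookup from urgency label to an action plan, which keeps the hardware side deterministic. This sketch is hypothetical throughout: the one-byte serial commands `b"U"` and `b"N"`, the mood names, and the idea that the Arduino sketch handles LED timing on its own are assumptions, not the project's protocol.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


class Writable(Protocol):
    """Anything with a write(bytes) method, such as a pyserial Serial port."""

    def write(self, data: bytes) -> Optional[int]: ...


@dataclass(frozen=True)
class ActionPlan:
    music_mood: str    # playlist category to request from Spotify
    led_pattern: str   # "blink" or "steady"
    serial_cmd: bytes  # hypothetical one-byte command understood by the Arduino sketch


PLANS = {
    "urgent": ActionPlan(music_mood="energetic", led_pattern="blink", serial_cmd=b"U"),
    "non-urgent": ActionPlan(music_mood="soothing", led_pattern="steady", serial_cmd=b"N"),
}


def plan_for(urgency: str) -> ActionPlan:
    """Map an urgency label to a fixed, inspectable action plan."""
    try:
        return PLANS[urgency]
    except KeyError:
        raise ValueError(f"unknown urgency label: {urgency!r}") from None


def send_to_arduino(port: Writable, plan: ActionPlan) -> None:
    """Send the plan's command byte; LED timing is assumed to live in the Arduino sketch."""
    port.write(plan.serial_cmd)
```

With pyserial, `port` could be `serial.Serial("/dev/ttyACM0", 9600)`, where the device path and baud rate are placeholders. Any object with a `write` method works, which also makes the logic easy to test without hardware.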

Technical Implementation (High-Level)

- LLM: OpenAI GPT-4 (task extraction, time normalization, urgency signal)

- Speech: Google Speech Recognition API

- Music: Spotify API

- Hardware: Arduino Uno & Leonardo (buttons, LEDs, LCD)

- UI: Tkinter dashboard for system state and debugging

This overview focuses on system behavior and orchestration rather than low-level code.

Final Design

Impact

- Demonstrated how LLMs can support natural language understanding in IoT devices

- Enabled urgency-aware personalization instead of fixed alarms

- Reduced reliance on phones for planning and reminders

- Improved comfort through gradual transitions and adjustable physical design

What I'd Do Next

After collecting data from real-world use, the next step would be to move beyond keyword-based urgency logic.

Future iterations would:

- Introduce learned urgency classification based on user behavior

- Incorporate feedback to personalize urgency thresholds

- Refine lighting and music selection for long-term comfort

This would allow the system to transition from rule-based decisions to adaptive intelligence, while preserving explainability and user trust.
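One possible first step toward feedback-driven urgency, before training any model, is a per-user vote tally layered over the existing rule. The sketch below is hypothetical and not part of the built system. The class name, the `min_votes` threshold, and the idea of asking the user after each alarm whether they wanted a more or less urgent wake-up are all assumptions.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class FeedbackUrgency:
    """Hypothetical per-user urgency override learned from post-alarm feedback.

    Falls back to a rule-based classifier until an activity has enough votes,
    so early behavior stays as predictable as the keyword version.
    """

    def __init__(self, fallback: Callable[[str], str], min_votes: int = 3) -> None:
        self.fallback = fallback
        self.min_votes = min_votes
        # activity -> [times user wanted an urgent wake-up, times they wanted a calm one]
        self.votes: DefaultDict[str, List[int]] = defaultdict(lambda: [0, 0])

    def record(self, activity: str, wanted_urgent: bool) -> None:
        self.votes[activity][0 if wanted_urgent else 1] += 1

    def classify(self, activity: str) -> str:
        # .get() does not insert a default entry for unseen activities.
        urgent, calm = self.votes.get(activity, [0, 0])
        if urgent + calm < self.min_votes:
            return self.fallback(activity)
        # Ties resolve to the calmer option.
        return "urgent" if urgent > calm else "non-urgent"
```

Every decision remains explainable as either "matched a keyword" or "you chose this N times". That fits the goal of preserving user trust while the system adapts.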

Skills & Tools

- Large Language Models (GPT-4) — intent extraction and task classification

- AI System Design — translating language into real-world actions

- Voice Interfaces — Google Speech API

- API Integration — Spotify playback orchestration

- IoT & Hardware — Arduino, LEDs, LCD, physical interaction

- UX & Prototyping — human-centered design for everyday routines